DIY ChatGPT plugin connector

After a few weeks on the ChatGPT plugin waiting list, I decided to try connecting an app to ChatGPT myself. There is LangChain, which can do the same thing and much more, and I’m pretty sure there are dozens of similar projects, but I wanted to connect things on my own and see what happens.

TLDR:

In case you are new to ChatGPT plugins, they are a way of connecting external services to ChatGPT’s language model so that users can instruct it, in natural language, to perform tasks. For instance, you can use a plugin to read and reply to emails. There are already many such plugins out there.

I didn’t find any documentation on how exactly ChatGPT plugins work internally, but there is enough detail on how to build a web application so that it can interface with ChatGPT as a plugin. That gives a good enough outline to design a solution and build it ourselves. Let's look at what we know.

The core of ChatGPT is a Large Language Model (LLM) trained on a massive dataset. As I understand it, it wouldn’t be wrong to think of it as a very advanced auto-complete model.

LLMs take natural language inputs and return natural language outputs in text form.

Plugins are web services that expose a REST API. Each plugin must also provide OpenAPI documentation and a standard manifest file named ai-plugin.json, which is served at a path common to all plugins (/.well-known/ai-plugin.json). This file contains the plugin's details.

To register a plugin, we have to provide our plugin's host.

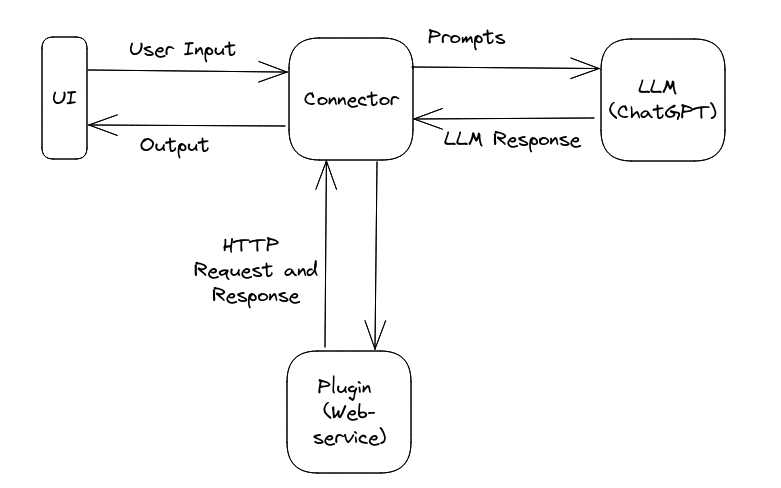

So, at registration time, ChatGPT presumably reads the ai-plugin.json file and the OpenAPI documentation and persists that information, so that whenever a related request comes in, it can invoke the relevant API endpoint. Here’s a workflow that just might work:

1. When an app registers, read its purpose and persist it (this should be in a database, but for this simple plugin it’s only kept in memory).
2. When a user input is received, first check whether it’s related to a plugin, and if so, find the relevant plugin API and call it. This is trickier than it sounds, but we will get to it.
3. Process the user input together with the API's response and return the reply to the user.
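As a rough sketch of how the three steps fit together, here is a small orchestration function. It is not the script used for this post. `handle_user_input`, `Registry` and the injected `classify`, `call_plugin` and `chat` callables are hypothetical names. They stand in for the intention prompt, the API call and a plain LLM call. A dict stands in for the database and is assumed to be filled by step 1 (registration) beforehand.

```python
from typing import Callable, Dict, Optional

# Hypothetical in-memory registry: plugin id -> row with "app_name", "purpose", "api_doc".
Registry = Dict[int, Dict[str, str]]


def handle_user_input(
    user_input: str,
    registry: Registry,
    classify: Callable[[str, Registry], Optional[int]],
    call_plugin: Callable[[Dict[str, str], str], str],
    chat: Callable[[str], str],
) -> str:
    # Step 2: ask the LLM whether the input matches a registered plugin's purpose.
    plugin_id = classify(user_input, registry)
    if plugin_id is None or plugin_id not in registry:
        # No plugin involved: answer as a normal chat message.
        return chat(user_input)
    # call_plugin is expected to get the request data from the LLM and execute it.
    api_response = call_plugin(registry[plugin_id], user_input)
    # Step 3: combine the user input with the API response and let the LLM reply.
    return chat(
        f"User request: {user_input}\n"
        f"API response: {api_response}\n"
        "Reply to the user."
    )
```

Injecting the three callables keeps the control flow testable without a real model or a network connection.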

Persisting the purpose of the plugin

Step 1 is straightforward. There is literally a field called “description_for_model” in the ai-plugin.json file so it’s just a matter of reading it and storing it against an identifier for the plugin. It would be a good idea to summarize it, but for the simple plugin I connected, that wasn’t necessary. This is how it would look in a database table.

| id | app_name | purpose | API doc |
|----|----------|---------|---------|
| 0 | reminder | create, edit, view and delete reminders | openapi: 3.0.0..... |
| 1 | read and send emails | …. | |
| 2 | …… | …… | …. |
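A minimal in-memory version of that table might look like the sketch below. `name_for_model`, `description_for_model` and `api.url` are fields of the ai-plugin.json manifest. The class and method names (`PluginRegistry`, `register`, `register_host`, `purposes`) are hypothetical. `register_host` assumes the plugin is reachable over HTTPS and needs network access, so the usage below registers a manifest dict directly.

```python
import json
import urllib.request
from dataclasses import dataclass, field
from typing import List, Tuple


def manifest_url(host: str) -> str:
    # Plugins serve their manifest at this well-known path on their host.
    return f"https://{host.rstrip('/')}/.well-known/ai-plugin.json"


@dataclass
class PluginRow:
    id: int
    app_name: str
    purpose: str
    api_doc: str


@dataclass
class PluginRegistry:
    # In-memory stand-in for the database table above.
    rows: List[PluginRow] = field(default_factory=list)

    def register(self, manifest: dict, api_doc: str) -> int:
        # Step 1: store description_for_model as the plugin's purpose.
        row = PluginRow(
            id=len(self.rows),
            app_name=manifest["name_for_model"],
            purpose=manifest["description_for_model"],
            api_doc=api_doc,
        )
        self.rows.append(row)
        return row.id

    def register_host(self, host: str) -> int:
        # Network version: fetch the manifest, then the OpenAPI doc it points to.
        with urllib.request.urlopen(manifest_url(host), timeout=10) as resp:
            manifest = json.load(resp)
        with urllib.request.urlopen(manifest["api"]["url"], timeout=10) as resp:
            api_doc = resp.read().decode("utf-8")
        return self.register(manifest, api_doc)

    def purposes(self) -> List[Tuple[int, str]]:
        # The id and purpose columns, as used by the intention prompt.
        return [(row.id, row.purpose) for row in self.rows]
```

For example, `PluginRegistry().register({"name_for_model": "reminder", "description_for_model": "create, edit, view and delete reminders"}, "openapi: 3.0.0")` returns id `0`. Summarizing the description before storing it, as suggested above, would slot into `register`.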

Getting the user's intention

For each user input, we have to check whether its intention is to perform a task related to any of the registered apps. To do this, we prompt the LLM to identify the intention. It took me a couple of attempts to come up with the following prompt, but it seems to work accurately.

```python
{
    "role": "user",
    "content": """This is a list of intentions.
{<A list of purposes for which the registered plugins are used.
Straight from the 1st and 3rd columns of the above table>}
select the matching intention of the following phrase from the above list:
The phrase: {<User input goes here>}
Respond only with the number of the matching option.
If there is no match, say 'no match'.
Do not return more than one intention.""",
}
```

The model deviates from the instructions a little and returns more than just the number, but it's not difficult to extract the relevant app using simple string operations in Python.
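Here is one way to build that prompt from the registered purposes and then pull the plugin id out of a chatty reply. This is a sketch under assumptions, not the original code. The function names are hypothetical, the `"<id>. <purpose>"` line format is an assumption, and a regular expression stands in for the "simple string operations".

```python
import re
from typing import Collection, Iterable, Optional, Tuple


def build_intention_prompt(purposes: Iterable[Tuple[int, str]], user_input: str) -> str:
    # One "<id>. <purpose>" line per registered plugin (the numbering format is an assumption).
    options = "\n".join(f"{pid}. {purpose}" for pid, purpose in purposes)
    return (
        "This is a list of intentions.\n"
        f"{options}\n"
        "select the matching intention of the following phrase from the above list:\n"
        f"The phrase: {user_input}\n"
        "Respond only with the number of the matching option.\n"
        "If there is no match, say 'no match'.\n"
        "Do not return more than one intention."
    )


def parse_intention(reply: str, valid_ids: Collection[int]) -> Optional[int]:
    # Treat an explicit "no match" as no plugin.
    if "no match" in reply.lower():
        return None
    # Otherwise take the first number in the reply that is a registered plugin id,
    # which tolerates replies such as "The matching option is 1.".
    for token in re.findall(r"\d+", reply):
        if int(token) in valid_ids:
            return int(token)
    return None
```

The prompt goes into a message such as `{"role": "user", "content": build_intention_prompt(purposes, user_input)}`. Checking the number against the registered ids guards against the model mentioning unrelated numbers.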

Calling the plugin API endpoint

The LLM can’t call the API itself, but that doesn’t stop it from telling us how to make the request, and that’s what we take advantage of here. We prompt the LLM to give us the information needed to make a request, based on the user input and the API documentation. This is the prompt that finally worked for me:

```python
{
    "role": "user",
    "content": """
Current date and time is {<Current date>}
You are a resourceful personal assistant. You are given the below API Documentation:
{<API Doc goes here>}
Using this documentation, send following request data in JSON format for making a request to call for answering the user question.
Use the following as JSON object keys "host", "method", "headers", "parameters", "parameter_location".
You should build the request data object in order to get a response that is as short as possible, while still getting the necessary information to answer the question.
First check if you have all the information to generate the request. If not, ask for them and do not try to generate the request data object.
Pay attention to deliberately exclude any unnecessary pieces of data in the response.
Question:{<User question>}
Request data:{{
url:
method:
headers:
parameters:
parameter_location:
}}
""",
}
```

Why have I included the current date? If you have ever asked ChatGPT for the date or time, you might know that it doesn’t have that information. That makes sense, because an LLM only knows the data it was trained on. It has no way of knowing the current date/time, or of reading it from the system it runs on, unless it’s given. Since the example plugin is about setting reminders, this turned out to be useful: a user can just say “Set a reminder for next Saturday” and the model can work out exactly when next Saturday is.
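Injecting the date is a one-line job when the prompt is built as a Python f-string. In an f-string, `{{` and `}}` produce literal braces, which is why the template writes the JSON skeleton that way. The function name `build_api_call_prompt` and the date format, including the weekday to help with phrases like "next Saturday", are assumptions. The `now` parameter exists only so the output can be checked.

```python
from datetime import datetime
from typing import Optional


def build_api_call_prompt(api_doc: str, question: str, now: Optional[datetime] = None) -> str:
    # The model has no clock, so the current date/time is injected on every call.
    if now is None:
        now = datetime.now()
    current = now.strftime("%Y-%m-%d %H:%M (%A)")  # weekday helps with "next Saturday"
    # {{ and }} below become literal { and } in the final prompt.
    return f"""Current date and time is {current}
You are a resourceful personal assistant. You are given the below API Documentation:
{api_doc}
Using this documentation, send following request data in JSON format for making a request to call for answering the user question.
Use the following as JSON object keys "host", "method", "headers", "parameters", "parameter_location".
You should build the request data object in order to get a response that is as short as possible, while still getting the necessary information to answer the question.
First check if you have all the information to generate the request. If not, ask for them and do not try to generate the request data object.
Pay attention to deliberately exclude any unnecessary pieces of data in the response.
Question:{question}
Request data:{{
url:
method:
headers:
parameters:
parameter_location:
}}"""
```

For example, `build_api_call_prompt(doc, "Set a reminder for next Saturday", datetime(2023, 5, 12, 9, 30))` starts with `Current date and time is 2023-05-12 09:30 (Friday)`.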

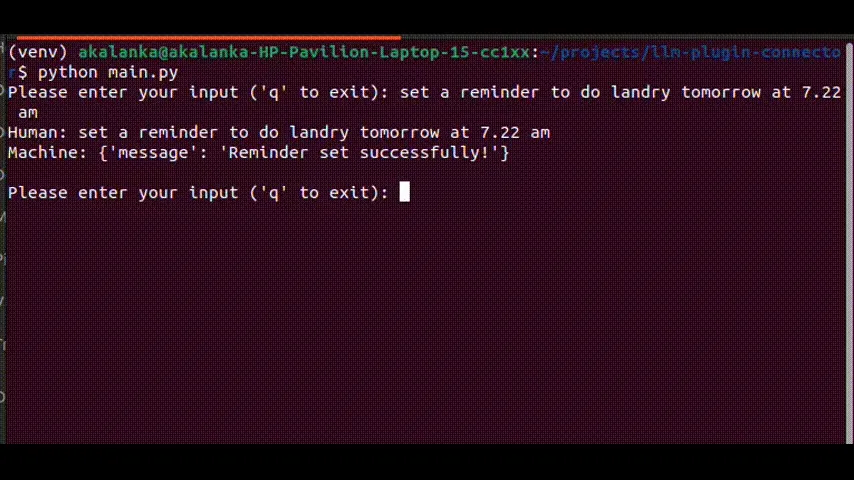

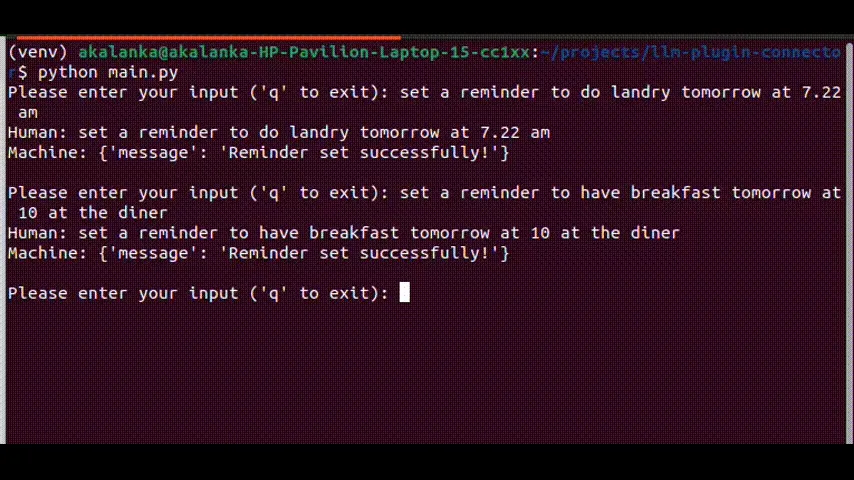

Executing the request

A simple Python script extracts the JSON object from the LLM's output, builds a request and executes it. Once we get the response, we have two options: return it to the user, or send it back to the LLM together with the original user request. Which is better really depends on the use case. To generalize, I used a simple hack. If the request method is GET, the response data is sent back to the LLM along with the user's original input. A POST request is usually not meant for getting information but for sending it to the plugin web service, so for POST requests the response is returned directly to the user. This was enough for the simple reminders app, which has only two API endpoints. With more plugins and more API endpoints, this part will probably need some improvement.

I would love to hear your views on this approach, especially on how to improve the prompts. Please let me know in the comments.
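The extract, build, execute and route steps can be sketched with the standard library alone. This is a minimal sketch, not the original script, and all function names are hypothetical. It makes three assumptions. First, the model returns a full URL. The prompt asks for a "host" key but its template shows `url:`, so either key is accepted. Second, `parameter_location` is "body" or something else, with anything other than "body" treated as query parameters. Third, the model's reply contains a single JSON object.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Dict, Tuple


def extract_request_data(llm_output: str) -> Dict[str, Any]:
    # Parse the text from the first "{" to the last "}" as JSON (assumes one object).
    start, end = llm_output.find("{"), llm_output.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object in LLM output")
    return json.loads(llm_output[start : end + 1])


def build_request(data: Dict[str, Any]) -> urllib.request.Request:
    # The prompt names the key "host" but its template says "url"; accept either.
    url = data.get("url") or data["host"]
    method = str(data.get("method") or "GET").upper()
    headers = dict(data.get("headers") or {})
    params = data.get("parameters") or {}
    body = None
    if str(data.get("parameter_location") or "query").lower() == "body":
        # Assumption: body parameters are sent as JSON.
        body = json.dumps(params).encode("utf-8")
        headers.setdefault("Content-Type", "application/json")
    elif params:
        # Anything else is treated as query-string parameters.
        url += ("&" if "?" in url else "?") + urllib.parse.urlencode(params)
    return urllib.request.Request(url, data=body, headers=headers, method=method)


def execute(req: urllib.request.Request) -> str:
    # Needs network access; raises urllib.error.HTTPError on non-2xx responses.
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.read().decode("utf-8")


def route_response(method: str, response_text: str, user_input: str) -> Tuple[str, str]:
    # The GET/POST hack: GET results go back to the LLM, POST results go to the user.
    if method.upper() == "GET":
        prompt = (
            f"User question: {user_input}\n"
            f"API response: {response_text}\n"
            "Answer the user's question."
        )
        return "llm", prompt
    return "user", response_text
```

Typical use is `req = build_request(extract_request_data(llm_output))`, then `route_response(req.get_method(), execute(req), user_input)`. Returning a `("llm" | "user", text)` pair keeps the routing rule in one place, which should make it easier to replace once more endpoints make the GET/POST rule too coarse.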